Power BI Write-Back to Azure SQL Database using Fabric User Data Functions

- Vojtěch Šíma

- Jan 12

- 11 min read

tl;dr Fabric's User Data Functions allow you to connect to items outside the Fabric environment, as long as you have the network and credentials ready. To enable write-back to an Azure SQL Database, you need a Private Endpoint, a Service Principal, and Azure Key Vault. See the blog to know how to implement everything.

Can a Fabric User Data Function Interact with Non-Fabric Items?

Yes. Azure SQL Database, SharePoint, or virtually any API can be triggered from a Fabric User Data Function (UDF), provided you can write the logic in Python and configure the necessary network or credentials. Note that the latter two can be quite a challenge.

For first-time readers: a User Data Function (UDF) is a Microsoft Fabric item that allows you to define a Python script that runs on demand. Ideally, this is triggered directly from a Power BI report (or at least, that's the most interesting use case). While UDFs make it very easy to interact with other Fabric items (such as Fabric SQL Databases, Lakehouses, or Warehouses), with enough determination, you can bend them to your specific needs.

To read more about UDF basics, check my initial blog post about it, where I cover an easier use case (writing to a Fabric SQL Database) and provide a step-by-step guide on setting up everything from scratch (UDF, Power BI, and governance).

Write-back to Azure SQL Database

Now, why would you want to go the extra mile to write back to an Azure SQL Database when you can do everything natively with a Fabric SQL Database?

The major reason is cost. If you are on a small capacity (like an F2), every single CU counts. If you want instant write-back, and why wouldn't you, using Fabric SQL might cost you extra because you have to pay to keep the capacity active for the SQL endpoint, just in case someone writes back at 1:00 AM. And generally, it's gonna more expensive to run a database in Fabric than Azure.

Azure SQL Database, on the other hand, can be technically free. For the write-back use case I will cover, Microsoft’s free Azure SQL Database offer is sufficient. While it has limitations, writing a few hundred rows is well within its parameters. Additionally, it enables you to:

Use the database completely outside of Fabric (e.g., for other operational reports).

Keep everything tidy in one place if your ecosystem is already Azure-heavy.

I know Fabric's goal is to have everything in one place, but if you're not starting from scratch, and you're already living in Azure eco-system, you can make it easy for yourself staying there.

Just before we start, the setup may feel overwhelming, but it's not that complicated. But you will need patience, in case you're not an Azure Portal admin.

Prerequsities

To make this work, we need a specific setup. If you don't have the rights to perform "shenanigans" in the Azure Portal, grab a friend who does.

Service principal

Azure SQL Server & configuration

Supporting Azure settings

New workspace with Fabric Capacity (any)

Managed private endpoint

Azure Key Vault (optional)

Variable library (optional)

Service principal

Let's start with the easiest part. The Service Principal will be the entity writing to our database. Apart from granting it read/write access via SQL later, you don't have to do much here.

In the Azure Portal, go to App registrations.

Click New registration, fill in the name, choose "Single Tenant" (usually), and click Register.

Once registered, go to Certificates & secrets.

Click New client secret, give it a description, choose an expiration, and click Add.

Important: Copy the Value immediately and save it temporarily (we will move it to Azure Key Vault later). Once you leave this page, you will never see this secret again.

Finally, go to the Overview tab and copy the Application (client) ID and Directory (tenant) ID.

Azure SQL Server & configuration

I will assume you already have an Azure SQL Server. If not, here is how to get the free version.

In the Azure Portal, go to Services -> Azure SQL.

In the overview, scroll to Azure SQL Database and click Try for free.

Provide the details (Subscription, Resource Group, Database Name).

For the server, select the region where your other resources live. (Note: I had issues with some Europe regions, so I used West US 2).

You will then be forwarded to Deployment; this should be automatic. If you have any issues during the deployment, it's very likely a bad region or insufficient rights.

Network Configuration: Once deployed, open your Server resource, go to Security -> Networking, and ensure Public Access is set to "Selected networks".

Lastly, check the box to Allow Azure services and resources to access this server.

Every time you connect from a device, you will get a firewall rule error, and you need to be added here.

Access in Azure SQL Database

Once you have access to your database, create a new table or alter an existing one, and add db_datawriter and db_datareader to the principal service account.

CREATE USER [article-demo-sql-writer] FROM EXTERNAL PROVIDER;The name must be identical to the service principal name. Then grant read/write:

ALTER ROLE db_datareader ADD MEMBER [article-demo-sql-writer];

ALTER ROLE db_datawriter ADD MEMBER [article-demo-sql-writer];These roles should exist automatically.

Supporting Azure settings

Depending on the state of your Azure, you may have to tinker around with a couple of settings. Naturally, you have to have the rights to modify the subscription, Contributor should do, but if you don't have your own company or you're not an Azure admin, you will probably have to ask someone.

The hardest part will be the network setup. I struggled with missing configuration in the subscription itself, specifically in Resource providers.

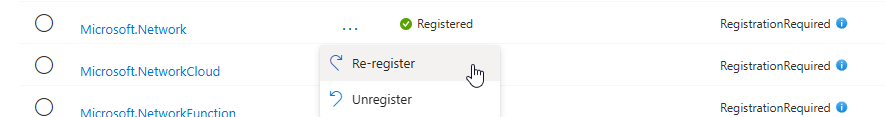

Go to your Subscription, click Settings, then Resource providers.

Check for Microsoft.Network, Microsoft.Fabric, and Microsoft.ManagedNetworkFabric.

Make sure the status is set to Registered. It's likely it won't be. You register it by clicking the three dots and registering it.

New workspace with Fabric Capacity (any)

Why a new workspace? Well, we will be setting up the private endpoint, and it brings some problems with it. For example, if you use notebooks, because you will be running on a private subnet, getting a new pool for your PySpark or plain Python will be affected. The starting time for each (even non-Spark) can take several minutes. This is quite annoying, especially if you're using non-Spark notebooks and you're used to instant session starts, or you use a Starter Pool that's also optimized for fast session starts.

This is one of the issues it can bring, so I recommend isolating it. Fortunately, the UDF lives in a completely different environment and it's not affected by session starts, so even with this, you can achieve instant write-back.

Naturally, the UDF is a Fabric item, and so are Managed Private Endpoints, so you need a Fabric capacity. The good news is that currently, it should work from Fabric F2 and trial capacities.

Since you're creating a new workspace, make sure you also have enabled settings like Users can create Fabric Items in the Power BI Admin Portal, etc.

Managed private endpoint

Once you have your workspace, go to Workspace settings and find Outbound networking.

Here, create a New managed private endpoint.

Fill the field accordingly.

Name and request message are up to you.

Resource identifier is the SQL Server. The resource path has to be strict, and it's in this format:

/subscriptions/{subscriptions-guid}/resourceGroups/{display-name}/providers/Microsoft.Sql/servers/{display-name}To easily get the path: in the Azure Portal, go to your SQL Server resource, check out the browser URL, and copy the part mentioned. Make sure it starts with the initial slash.

Once you fill it in, in real-time, the Target sub-resource will become available for you, and you can choose 'Azure SQL Database'.

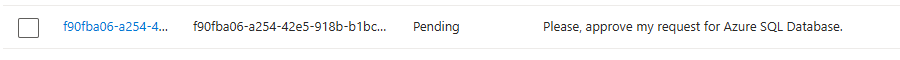

If you did everything correctly, this will appear after pressing Create.

To approve this:

Go to the Azure SQL Server resource in the Azure Portal.

Go to Security, Networking, then Private Access.

You should see your request.

Approve it.

Then go back to your workspace settings and refresh the request; it should be approved.

Azure Key Vault (optional)

Azure Key Vault is purely optional, but highly recommended. This will allow you to skip hardcoding values into the UDF code and just connect to Azure Key Vault and retrieve them more safely. If you still have your service principal information in your clipboard, let's build it; otherwise, go get that information first.

In the Azure Portal, go to Key Vaults, choose your key vault, or create a new one (you need the most basic one).

Then go to Objects, Secrets, and Generate/Import three secrets.

If this action is disabled, you lack rights. Your account (your personal account that will run the UDF) needs Key Vault Contributor (or at least Key Vault Secrets User) if someone else will create the secrets for you.

Create three secrets:

tenant-id

article-demo-sql-writer-secret

article-demo-sql-writer-client-id

Obviously, the name is up to you

Variable library (optional)

If you feel extra spicy today, you can make the whole setup more manageable using a Variable library. We can store the Azure SQL Server URL, database name, and the Azure Key Vault URL.

Important note: In my testing, using a Variable Library in connection with Managed Private Endpoints introduced significant latency. There is likely a one-minute waiting time which eventually fails, and then the process continues quickly. At this time, I didn't find a nice workaround that wouldn't be overkill. So, I will also show you code where you hardcode the variable information; however, these are not security issues, as this is just configuration data. The whole udf call should take up to 5 seconds (latency when using variable library is one minute).

In your workspace, click New item, Variable library.

Click New variable.

Let's create three of them:

azure-key-vault-url

writeback-azure-sql-url

writeback-azure-sql-database-name

You can find all this information in the respective resources in the Overview tab, under Vault URI, Server name, and the names of available databases.

Click Save.

And that's it for the prerequisites. Let's build the actual User Data Function.

Write-back to Azure SQL Database User Data Function

Finally, what you came for. The overall setup will be like this: we will insert a new row every time we run this function, with user-provided information about some entity ID and a comment. That's kinda it.

For the technical stack, we will use specific libraries:

pyodbc

azure-identity

azure-keyvault-secrets

In your workspace, create a new item, it's called User Data Functions.

Click New function. Then, go to Library management, and let's import the libraries we need.

Click Add from PyPI and select the following libraries. The version you use is up to you; this is a public library, and it's not vetted by Microsoft itself unless they are the provider. So, this part is up to you. (Although two of them are created by Microsoft, and the third one is recommended by them in the docs, so.)

Then we need to publish and install the libraries. Click Publish. This may take a couple of minutes

Once it's published, make sure you refresh your browser, and then you can start using the installed libraries.

To use the Variable library in user user-friendly way, thanks to the November 25 update, we can add this fabric item using Manage connections. So do the following.

Click Add connection and choose the Variable Library you created a couple of steps back.

Then click Edit on the right and give it a nicer alias. Since you can only use letters, let's do something like 'varlib'.

Then copy the code (one of these variants, my pick is the one without the Variable library) and then click Publish.

User Data Function call with Variable library

import fabric.functions as fn

import pyodbc

import struct

from azure.keyvault.secrets import SecretClient

from azure.identity import ClientSecretCredential

udf = fn.UserDataFunctions()

@udf.connection(alias="varlib", argName="varLib")

@udf.generic_connection(argName="keyVaultClient", audienceType="KeyVault")

@udf.function()

def azure_sql_serer_writeback(varLib: fn.FabricVariablesClient, keyVaultClient: fn.FabricItem, itemId: str, comment: str)-> str:

key_vault_url = varLib.getVariables().get("azure-key-vault-url")

azure_sql_server_name = varLib.getVariables().get("writeback-azure-sql-url")

azure_sql_database_name = varLib.getVariables().get("writeback-azure-sql-database-name")

try:

key_vault_credentials = keyVaultClient.get_access_token()

client = SecretClient(vault_url=key_vault_url, credential=key_vault_credentials)

tenant_id = client.get_secret("tenant-id").value

article_demo_sql_writer_client_id = client.get_secret("article-demo-sql-writer-client-id").value

article_demo_sql_writer_secret = client.get_secret("article-demo-sql-writer-secret").value

sql_cred = ClientSecretCredential(tenant_id, article_demo_sql_writer_client_id, article_demo_sql_writer_secret)

token_obj = sql_cred.get_token("https://database.windows.net/.default")

token_bytes = token_obj.token.encode("UTF-16-LE")

token_struct = struct.pack(f'<I{len(token_bytes)}s', len(token_bytes), token_bytes)

conn_str = (

"DRIVER={ODBC Driver 18 for SQL Server};"

f"SERVER={azure_sql_server_name};DATABASE={azure_sql_database_name};"

"Encrypt=yes;TrustServerCertificate=no;ConnectRetryCount=3;"

)

with pyodbc.connect(conn_str, attrs_before={1256: token_struct}) as conn:

conn.timeout = 5

cursor = conn.cursor()

cursor.execute(

f"""

INSERT INTO [schema].[table] ([column1], [column2], [column3])

VALUES (?, ?, GETDATE())

""",

(itemId, comment)

)

return f"OK"

except Exception as e:

return f" ERROR: {str(e)}"User Data Function call without Variable library

import fabric.functions as fn

import pyodbc

import struct

from azure.keyvault.secrets import SecretClient

from azure.identity import ClientSecretCredential

udf = fn.UserDataFunctions()

@udf.generic_connection(argName="keyVaultClient", audienceType="KeyVault")

@udf.function()

def azure_sql_serer_writeback(keyVaultClient: fn.FabricItem, itemId: str, comment: str)-> str:

key_vault_url = "https://yours.vault.azure.net/"

azure_sql_server_name = "azure-sql.database.windows.net"

azure_sql_database_name = "database-name"

try:

key_vault_credentials = keyVaultClient.get_access_token()

client = SecretClient(vault_url=key_vault_url, credential=key_vault_credentials)

tenant_id = client.get_secret("tenant-id").value

article_demo_sql_writer_client_id = client.get_secret("article-demo-sql-writer-client-id").value

article_demo_sql_writer_secret = client.get_secret("article-demo-sql-writer-secret").value

sql_cred = ClientSecretCredential(tenant_id, article_demo_sql_writer_client_id, article_demo_sql_writer_secret)

token_obj = sql_cred.get_token("https://database.windows.net/.default")

token_bytes = token_obj.token.encode("UTF-16-LE")

token_struct = struct.pack(f'<I{len(token_bytes)}s', len(token_bytes), token_bytes)

conn_str = (

"DRIVER={ODBC Driver 18 for SQL Server};"

f"SERVER={azure_sql_server_name};DATABASE={azure_sql_database_name};"

"Encrypt=yes;TrustServerCertificate=no;ConnectRetryCount=3;"

)

with pyodbc.connect(conn_str, attrs_before={1256: token_struct}) as conn:

conn.timeout = 5

cursor = conn.cursor()

cursor.execute(

f"""

INSERT INTO [schema].[table] ([column1], [column2], [column3])

VALUES (?, ?, GETDATE())

""",

(itemId, comment)

)

return f"OK"

except Exception as e:

return f"ERROR: {str(e)}"Quick code overview

Before I go over the code, big shout-out to @Riccardo Perico and his blog post, thanks to which I found out, the user identity is now available, or more precisely, we can now access Azure Key Vault in User Data Functions, and we don't have to hardcode secrets in the code or pass them as variables.

Function Definition & Connections

@udf.connection(alias="varlib", argName="varLib")

@udf.generic_connection(argName="keyVaultClient", audienceType="KeyVault")

@udf.function()

def azure_sql_server_writeback(varLib: fn.FabricVariablesClient, keyVaultClient: fn.FabricItem, itemId: str, comment: str) -> str:This part is the most important. The decorators here do the heavy lifting of authentication and networking.

Line 1 (@udf.connection): This establishes the connection to our Variable Library using the alias varlib (which we defined in the "Manage connections" UI). It injects this connection into the function as the argument varLib.

Line 2 (@udf.generic_connection): This is the "hot feature" that allows us to connect to Azure Key Vault. The audienceType="KeyVault" parameter is crucial; it tells Fabric to generate a token specifically scoped for Key Vault access.

Line 4 (The Function): We define the Python function. Note that the argument names (varLib, keyVaultClient) must match the argName parameters in the decorators above.

If you want to do the version without the Variable Library, simply remove the first decorator line and the varLib parameter from the function definition.

Retrieving Configuration

Next, we retrieve the infrastructure details.

key_vault_url = varLib.getVariables().get("azure-key-vault-url")

azure_sql_server_name = varLib.getVariables().get("writeback-azure-sql-url")

azure_sql_database_name = varLib.getVariables().get("writeback-azure-sql-database-name")Here, we define local variables to hold the values fetched from the Variable Library.

For the hardcoded version: If you skipped the Variable Library, you would replace the lines above with hardcoded strings. From this point on, both versions are identical.

Fetching Secrets from Key Vault

Now we handle the security. We use the identity of the UDF (the user or owner) to unlock the Key Vault, and then retrieve the Service Principal credentials.

key_vault_credentials = keyVaultClient.get_access_token()

client = SecretClient(vault_url=key_vault_url, credential=key_vault_credentials)

tenant_id = client.get_secret("tenant-id").value

article_demo_sql_writer_client_id = client.get_secret("article-demo-sql-writer-client-id").value

article_demo_sql_writer_secret = client.get_secret("article-demo-sql-writer-secret").valueFirst, we get the access token from the keyVaultClient. We then initialize the standard Azure SecretClient using that token. Finally, we fetch the three specific secrets we stored earlier (Tenant ID, Client ID, and Client Secret).

Authenticating to SQL Server

sql_cred = ClientSecretCredential(tenant_id, article_demo_sql_writer_client_id, article_demo_sql_writer_secret)

token_obj = sql_cred.get_token("https://database.windows.net/.default")

token_bytes = token_obj.token.encode("UTF-16-LE")

token_struct = struct.pack(f'<I{len(token_bytes)}s', len(token_bytes), token_bytes)We use the Service Principal secrets to request an access token for Azure SQL Database.

However, the SQL driver is a bit particular; it can't just take the token as plain text like Python does. It requires a specific byte format. The last two lines handle this translation, "re-packaging" the token so the driver can read our credentials correctly without throwing an error.

Executing the Write-back

conn_str = (

"DRIVER={ODBC Driver 18 for SQL Server};"

f"SERVER={azure_sql_server_name};DATABASE={azure_sql_database_name};"

"Encrypt=yes;TrustServerCertificate=no;ConnectRetryCount=3;"

)

with pyodbc.connect(conn_str, attrs_before={1256: token_struct}) as conn:

conn.timeout = 5

cursor = conn.cursor()

cursor.execute(

f"""

INSERT INTO [schema].[table] ([column1], [column2], [column3])

VALUES (?, ?, GETDATE())

""",

(itemId, comment)

)

return "OK"

except Exception as e:

return f"ERROR: {str(e)}"Here, we first establish the connection using the pyodbc driver. Once we have that, we can start sending SQL queries, for example, the insert snippet you see above.

Naturally, if you want to build it to be more "chatty," you can change the return statement to something more specific. You could query the data back and display a confirmation message like "ID 1234 saved successfully."

If everything goes according to plan, we simply return the text "OK". If there is any error, we will get the exact thing that went wrong thanks to the Exception handling.

Power BI / Translytical Task Flows setup

Once we publish our UDF, we can implement it in Power BI. Since I already explained the details here, I will forward you there.

Architecture-wise, the Power BI semantic models don't have to be on a Fabric Capacity themselves, and you should use DirectQuery when connecting to the Azure SQL source.

Comments